Monocular vision SLAM

Some related discussion on OpenGL boards

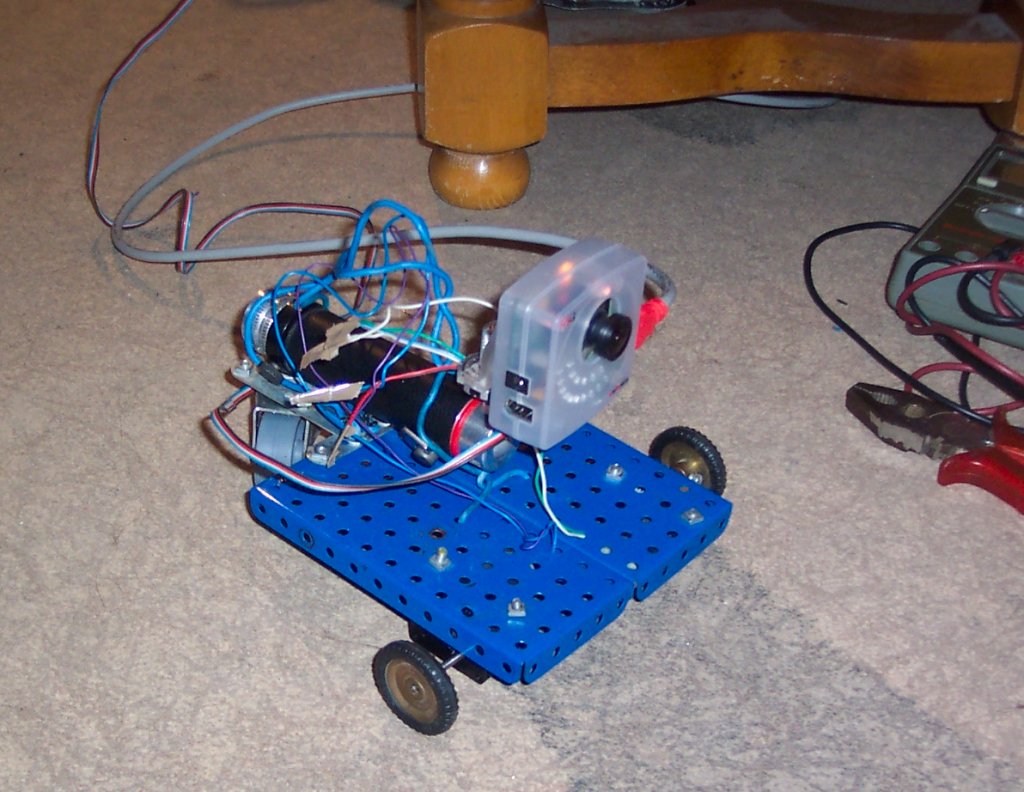

The aim is to build an autonomous vacuum cleaner that can go everywhere, but efficiently (i.e. unlike the Roomba), by doing Simultaneous Localization and Mapping (SLAM) with monocular vision. The only sensor would be a single camera, possibly complemented by some basic odometry and/or whiskers (touch sensors).

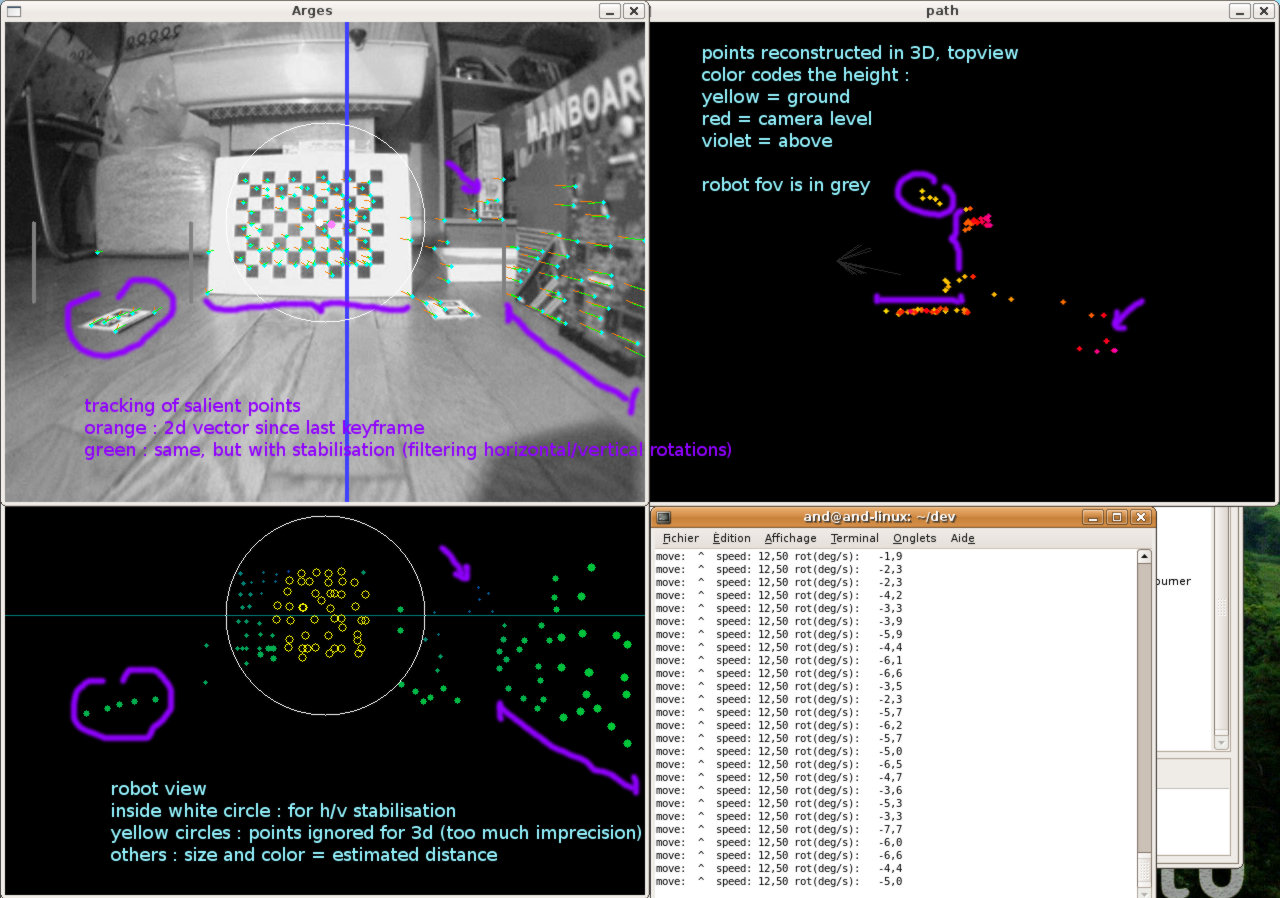

Current prototype: the video is captured while Arges, the monocular robot, is operated manually.

3D SLAM is done offline on the recorded video, with almost real-time performance:

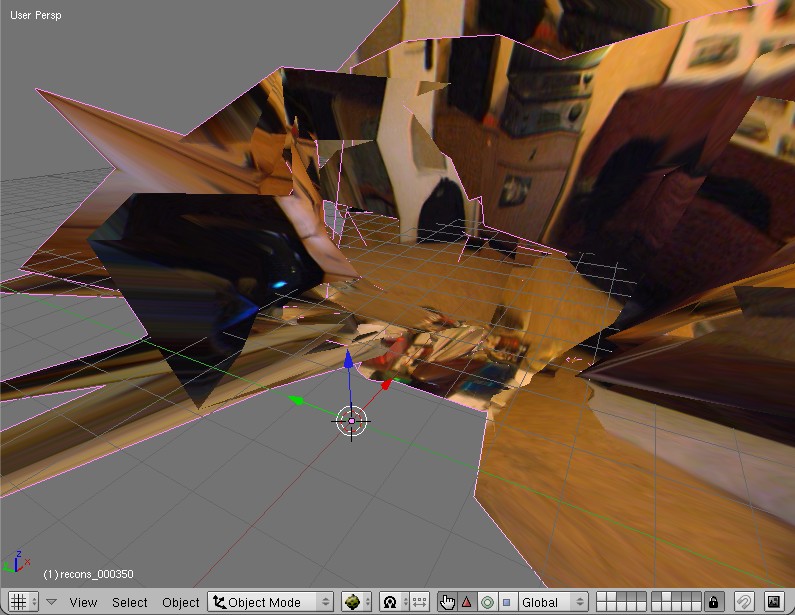

(Different scene) A few 3D keyframes, captured and automatically assembled in Blender: